Why is AI still so hard to get right in 2025?

It’s time to face six common barriers in the AI journey and then trade your compass for a GPS.

Almost twice as many people used artificial intelligence (AI) for work every week in 2024 compared to 2023. If you’re a business or IT leader, you may be just as focused on a different AI trend that started in 2024: adoption at the organizational level has been slowing down.

Slalom’s survey of 200 C-suite executives found that 69% of organizations are still in the early stages of AI adoption, focused on pilot projects and productivity gains. We’ve talked about this with a lot of leaders over the past couple of years, listening to them explain how the promise of exponential value creation with AI is “stuck.” It’s often trapped behind some combination of old systems, overwhelmed teams, and unclear business cases. These challenges are surmountable only if you acknowledge they exist. As a line often attributed to Albert Einstein goes, “If I had only one hour to save the world, I would spend fifty-five minutes defining the problem, and only five minutes finding the solution.”

Some barriers to AI adoption are obvious; others lie below the surface. The hidden ones can be harder to find and potentially more damaging. We think you have more than an hour (or 55 minutes) to examine these barriers and then change your business and the world for the better with AI. But we also hear the “tick-tock” of the AI adoption clock getting louder as many companies’ AI journeys start to slow or stall. Here’s a deep dive into today’s AI barriers, and how to overcome them to make AI actually work (hint: your AI journey needs a GPS).

Surface-level execution barriers: What most are learning

Security, privacy, and ethical concerns were the first hurdle

A 2024 report from the Wharton School titled “Growing Up: Gen AI’s Early Years” found that 12 months after it originally surveyed senior decision makers at enterprise organizations, fewer reported caution and skepticism associated with generative AI (GenAI), and more reported positive emotions, such as “optimistic, excited, and appreciation.” While security was still reported to be a “top factor for consideration of GenAI” and customer data privacy was still seen as a “top concern,” overall perception of security challenges with GenAI generally seemed to be trending more positive.

The world has had some time to wrap its head (and guardrails) around GenAI, with many global bodies having published best practices around security, privacy, and intellectual property (IP) in 2024, if not earlier. For some organizations, security is even becoming more of a reason to use GenAI than a barrier to using it. As an example, we worked with payment services company Sionic to help its teams develop and release advanced, AI-powered fraud detection supported by training and inference pipelines, synthetic data, and a rules engine. “Slalom’s team gave us a good, solid baseline and foundation that Sionic can now grow on and scale out as we move into a production footprint,” said Sionic’s CTO.

What to do about it: Don’t yet have a strategy for responsible AI in place? Take advantage of the body of research and proven best practices around security, privacy, and ethics to inform yours.

You can’t scale AI on shaky data and siloed systems

In an IDC research report that found 88% of AI pilots fail to reach production, the authors asserted that the rate “indicates the low level of organizational readiness in terms of data, processes and IT infrastructure.”

AI adoption continues to stall not because of model limitations but because most organizations aren’t ready—operationally or architecturally. Integration, accuracy, and privacy challenges stem less from GenAI itself and more from poor data management and access governance. The recent surge in investment in data access governance reflects a growing respect for the fact that success with AI depends on the strength of your organization’s data foundation.

What to do about it: If you’re struggling to scale AI, don’t skimp on your data foundation. Even if AI isn’t on your immediate roadmap, investing in your data layer will help ensure that more of your pilots make it to production when the time comes. That could mean bringing more data processing in-house, like CRISP Shared Services (CSS) did with our help, allowing it to process over 1.7 million messages daily to drive better health outcomes across 10 states and counting.

[Figure: Surface-level vs. deeper AI barriers]

Deeper execution barriers: What many are discovering

It’s not as if the following challenges are entirely unfamiliar to organizations. But their strategic nature means that they can remain “hidden” (or at least appear less urgent) for longer into the AI journey than operational barriers. These barriers are more about people and business results, and they require looking at not just the tech that people are using but also the mindsets they have, the business payoffs of a given proof of concept (POC), and the long-term vision for continuous value creation.

AI won’t get organizations far if leadership can’t agree where to go

Too often, AI efforts are driven in silos, with IT, business units, and executives pulling in different directions. A revealing 2025 survey from Writer shows that 42% of C-suite executives believe AI adoption is actively creating organizational rifts. The disconnect seen in Writer’s research runs deep: 71% of executives report AI applications being created in silos and 68% note AI-induced tension between IT teams and other business units.

This fragmentation hampers the potential benefits of AI, leading to inefficiencies and missed opportunities. The real challenge is leadership alignment: Who’s in control of AI strategy, who’s being left out of the process, and where do different functions’ seemingly competing visions for AI actually overlap?

What to do about it: When you create cross-functional leadership to drive AI transformation, you go places. We’re seeing organizations start to realize that successful AI implementation requires a more collaborative approach than other technology integrations, as evidenced by our research showing that organization-wide AI initiatives led by cross-functional teams jumped from 5% in 2023 to 52% in 2024.

People’s skills—and not just “AI skills”—are more important than their job titles

In its Future of Jobs Report 2025, the World Economic Forum (WEF) projects that 39% of existing skill sets will transform or become outdated by 2030. This fundamental reshaping of work—largely spurred by advancements in AI—demands both technical and nontechnical capabilities. Workers will almost certainly need different technical skills in five years, but one not-so-technical skill will never get old: critical thinking.

Critical and/or analytical thinking has become the cornerstone of success. The WEF finds that seven out of 10 companies consider analytical thinking essential in 2025, while our year-over-year AI research shows it’s growing even more indispensable, with critical thinking and learning agility now outranking pure technical skills in executives’ priorities. Without critical thinking and learning agility, workers can’t be as easily reskilled and redeployed when AI inevitably disrupts traditional organizational charts and job titles.

What to do about it: Organizations are responding to the demand for both technical and nontechnical skills with unprecedented investment in training. Is your company one of them? Slalom’s success stories put the return on this investment into context, demonstrating what’s possible when organizations develop a people- and skills-centered approach to AI adoption.

A major media company saw productivity gains of 15% to 40% after reaching 95% GitHub Copilot adoption through a rollout co-facilitated with Slalom.

Takeda Pharmaceuticals achieved 91% higher AI proficiency levels and identified 75% more daily use cases for AI with our people-centered AI consulting.

There’s (way) more than one way to measure value from AI

Nearly all (97%) of 600 data leaders surveyed by Informatica reported difficulty demonstrating GenAI’s business value. This challenge is compounded by the rush to show ROI, which is creating its own set of problems. As IDC’s Ashish Nadkarni noted to CIO.com, “Alarm bells are going off and people are willing to bend the rules on what ROI means.” This pressure to quickly prove ROI can lead to short-sighted decisions and misaligned priorities, ultimately undermining the true potential of AI.

What to do about it: Instead of rushing to show ROI, opt for an AI success scorecard that goes beyond traditional ROI metrics, tracking revenue alongside measures like:

Speed: The Florida Division of Emergency Management uses AI to aid first responders 90% faster, helping get lifesaving resources to communities sooner.

Experience: United Airlines uses AI to customize 35% more flight updates for travelers and resolve inquiries faster, driving higher customer satisfaction.

Efficiency: Jaja Finance uses AI to free up 30% to 40% more time for customer service teams, who can reinvest that time in addressing complex queries.

AI value fades fast if you don’t redeploy it

The data on AI-assisted productivity shows remarkable consistency. Goldman Sachs research gauges a 25% average productivity increase from AI use. The Federal Reserve Bank of St. Louis calculates 33% gains per hour of GenAI use. Our experience corroborates improvements in this range.

As productivity gains from using AI get more predictable, the focus of leaders, especially those responsible for AI adoption, needs to shift from short-term savings to strategic reinvestment of time and resources for sustainable growth. Organizations don’t need to stop what they’re doing to transform; they can do it continuously by reinvesting those gains in their plans.

What to do about it: Our forum with more than 300 executives in early 2025 identified three critical horizons for a balanced innovation portfolio: immediate efficiency gains, disruptive growth opportunities, and long-term transformation capabilities.

Recalculating route: Your AI journey needs a GPS, not a compass

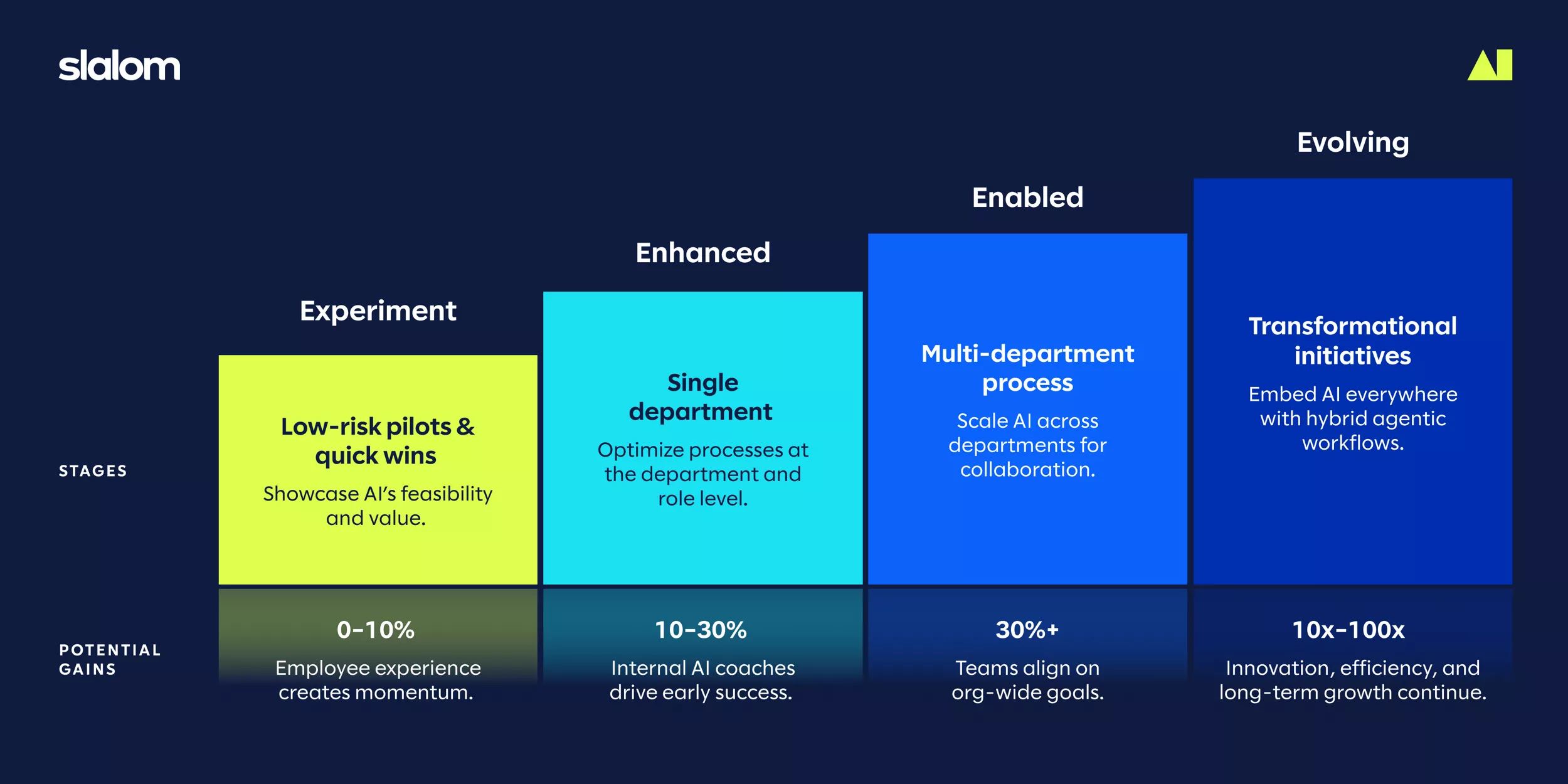

The three horizons of short-term gains, disruptive growth, and long-term transformation resemble the progression many organizations follow in their AI journeys. Early on, it’s perfectly fine to focus on targeted experiments to validate AI’s potential. But as companies mature their capabilities, they must expand toward enhanced operations and enterprise-wide transformation to realize AI’s full promise. Failing to adapt to these changes results in a loss of competitiveness, growth, and customer trust, risking obsolescence in a rapidly evolving landscape.

[Figure: Stages of the AI journey]

AI success requires precise navigation through both familiar and emerging challenges. Understanding these barriers—from surface-level security and data integration to deeper challenges of leadership alignment, workforce development, and value measurement—gives organizations the clarity needed to overcome them. With this understanding, companies can shape their path forward with confidence. Much like a GPS system recalculating based on real-time conditions, organizations must continuously adjust their approach. Each recalibration strengthens their capacity to learn, adapt, and advance toward transformative impact.

“The technology movements of the past have required a compass—like go East or go West,” says Ali Minnick, general manager of Customer Outcomes & Solution Innovation at Slalom. “With AI, it is much more of a GPS system, where we have to navigate a pretty dynamic journey to the future with our clients and take their employees along this incredible ride in an engaging way.”

1. Jeremy Korst, Stefano Puntoni, and Mary Purk. "Growing Up: Navigating Generative AI’s Early Years – AI Adoption Report." (Wharton Human-AI Research, October 21, 2024), https://ai.wharton.upenn.edu/focus-areas/human-technology-interaction/2024-ai-adoption-report/.